As energy scarcity and environmental protection become increasingly pressing issues, more and more data centers are adopting liquid cooling as an efficient, energy-saving way to achieve sustainable development. In this first chapter, we explore the background and advantages of liquid cooling and why it is so widely applied today.

Table of Contents

Part 1/ Why Do We Need Liquid Cooling?

– Heat dissipation is crucial for data center stability.

– Liquid cooling technology serves as an external supplementary heat dissipation method.

– Liquid cooling technology involves transferring the heat generated by IT equipment to an external cold source circulation system.

Part 2/ Why Has Liquid Cooling Become an Explosive Growth Point?

– Technological bottlenecks, costs, and sustainability drive the demand for liquid cooling.

– Liquid cooling technology addresses the heat dissipation challenges of high-power devices.

– Liquid cooling technology enhances energy efficiency, reducing operational costs and meeting energy efficiency requirements.

Drivers of Liquid Cooling Demand: AI as the Demand Driver and Inflection Point

– The development of AI technology has led to the emergence of large-scale models.

– Liquid cooling technology caters to the heat dissipation needs of high-power devices.

– The development of liquid cooling technology aligns with energy scarcity and environmental protection demands.

– AI workloads in both the training and inference stages require substantial liquid cooling support.

Part 1: Why Liquid Cooling?

Humans are often called the “king of creatures” because of our powerful brains and strong muscles, which let us walk upright. What is often overlooked is that humans also have one of the most effective heat dissipation systems in the animal kingdom.

Imagine, for instance, a cheetah that can run at 120 kilometers per hour but can sustain that speed for only 60 seconds. If it fails to catch its prey within those 60 seconds, it must abandon the chase, and if it sprints like this repeatedly in a day without securing food, it may eventually die. Humans, by contrast, can keep going for hours. Many people now run marathons: the fastest finish in around two to three hours, and slower runners may take six, yet they all persist to the finish line. This endurance comes from our excellent ability to shed heat, which gives us impressive stamina.

In a data center, heat dissipation is likewise vital to the stability of the whole facility and its infrastructure. At the same time, we want to keep the entire IT system running reliably while using as little electricity as possible.

To extend the analogy, humans use a range of techniques to dissipate heat. Internally, we rely on breathing, our skin, well-developed sweat glands, and blood circulation. Beyond these built-in mechanisms, humans, as the “crown of creation,” can also use external means. If the air conditioning at an event is not cool enough for me, for instance, I can take a shower and let the water cool me down, or I can go swimming.

As we all know, swimming is the ultimate form of heat dissipation. When people talk about liquid cooling, however, many use the term for any use of liquid to carry heat away. Strictly speaking, liquid cooling refers to one part of the data center's internal circulation system: the internal loop that transfers the heat generated by IT equipment to the external cold-source loop.
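To make “transferring heat to the cold-source loop” concrete, the sketch below applies the sensible-heat balance Q = ṁ · c_p · ΔT to estimate how much coolant must flow to carry a given heat load away. The 8 kW load, the 10 K coolant temperature rise, and the fluid property values are illustrative assumptions rather than figures from any particular system, and the function name `required_flow` is hypothetical.

```python
# Sensible-heat balance: Q = m_dot * c_p * delta_T
# Property values are approximate room-temperature figures (assumptions).
FLUIDS = {
    # name: (specific heat c_p in J/(kg*K), density in kg/m^3)
    "water": (4186.0, 997.0),
    "air": (1005.0, 1.2),
}


def required_flow(heat_w: float, delta_t_k: float, fluid: str) -> tuple[float, float]:
    """Return (mass flow in kg/s, volumetric flow in L/min) needed to carry
    heat_w watts away with a coolant temperature rise of delta_t_k kelvin."""
    cp, rho = FLUIDS[fluid]
    mass_flow = heat_w / (cp * delta_t_k)         # kg/s
    vol_flow_l_min = mass_flow / rho * 1000 * 60  # m^3/s -> L/min
    return mass_flow, vol_flow_l_min


if __name__ == "__main__":
    heat = 8000.0  # hypothetical 8 kW heat load
    dt = 10.0      # hypothetical 10 K coolant temperature rise
    for fluid in FLUIDS:
        m, v = required_flow(heat, dt, fluid)
        print(f"{fluid}: {m:.3f} kg/s, {v:,.0f} L/min")
```

Under these assumptions, water needs roughly 11.5 L/min while air needs on the order of 40,000 L/min. That gap in volumetric heat capacity is the basic physical reason liquid can move heat far more compactly than air.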

One of the key challenges in liquid cooling is the amount of heat that must be removed per square centimeter. The human brain offers a parallel: it consumes approximately 20% of the body's energy, about 24 watts. That may not sound like much, but when the brain works hard, your head can feel hot and tends to sweat, and when the brain overheats, thinking slows down. This shows that the brain, the body's most critical component, is also the one whose cooling matters most for keeping the whole system running stably.
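Heat flux, the power a component dissipates divided by its area, is the “per square centimeter” quantity referred to above. A minimal sketch, assuming a 700 W GPU whose heat is generated across a die of roughly 814 mm² (an assumed figure used only for illustration); `heat_flux_w_per_cm2` is a hypothetical helper:

```python
def heat_flux_w_per_cm2(power_w: float, area_mm2: float) -> float:
    """Average heat flux in W/cm^2 for a heat source of the given area."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return power_w / area_cm2


if __name__ == "__main__":
    # Assumed figures: 700 W dissipated over a die of about 814 mm^2.
    print(f"{heat_flux_w_per_cm2(700, 814):.0f} W/cm^2")
```

This gives an average of about 86 W/cm². Local hot spots on a real die run higher, while the package and cold plate spread the heat over a larger area before it reaches the coolant.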

Part 2: Why Liquid Cooling Has Become an Explosive Growth Point

Reasons Driving the Demand for Liquid Cooling: Technological Bottlenecks, Costs, and Sustainability

The surge in demand for liquid cooling has several causes. First, as IT hardware has advanced, air cooling has hit a ceiling: its cooling capacity has stopped keeping pace with chip power. Consider CPUs and GPUs. CPU power consumption has climbed rapidly, from 205 watts in 2017 to 350 watts today, and future processors may reach once-unimaginable levels of 500 or 600 watts.
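The growth rate implied by these CPU figures can be checked with a compound-annual-growth-rate calculation. The sketch assumes that “today” means 2023 (six years after 2017) and extrapolates at a constant rate, which is a simplification rather than a forecast; the helper names `cagr` and `years_to_reach` are hypothetical.

```python
import math


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


def years_to_reach(current: float, target: float, rate: float) -> float:
    """Years for `current` to grow to `target` at a constant annual `rate`."""
    return math.log(target / current) / math.log(1.0 + rate)


if __name__ == "__main__":
    # Assumption: "today" is taken to be 2023, i.e. 6 years after 2017.
    rate = cagr(205, 350, 2023 - 2017)
    print(f"CPU power CAGR: {rate:.1%}")
    for target in (500, 600):
        years = years_to_reach(350, target, rate)
        print(f"{target} W in ~{years:.1f} years at that rate")
```

Under that assumption the rate comes out at roughly 9% per year, which would put 500 W about four years out and 600 W about six.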

On the GPU side, NVIDIA's latest H100 draws up to 700 watts. In many scenarios, air cooling cannot keep such chips running stably. In addition, NVIDIA's test results have shown that, at comparable performance, liquid cooling can reduce energy consumption by 30%.

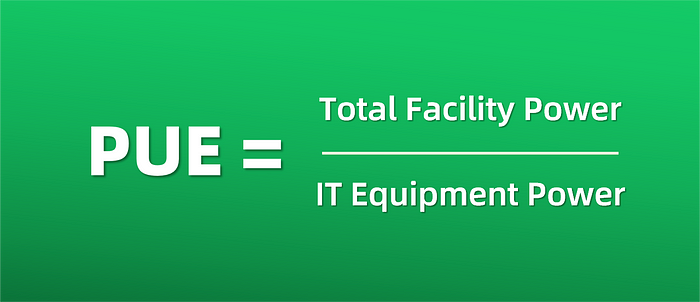

What does a 30% energy saving actually mean? For the data center as a whole, a substantial cut in energy consumption is crucial: it helps the facility meet PUE requirements and reduces its draw on energy-consumption quotas. For businesses, it means more computing capacity from the same limited resources. At savings in the 20%-30% range, the difference becomes large enough to decide whether an enterprise's operations are sustainable.
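PUE is total facility power divided by IT equipment power, so cutting cooling power lowers PUE directly. The sketch below uses a hypothetical facility (1,000 kW IT, 400 kW cooling, 100 kW other overhead) and applies the 30% reduction to cooling power only; both the facility figures and the choice to apply the saving to cooling alone are assumptions made for illustration, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 8760


def pue(it_kw: float, cooling_kw: float, other_kw: float) -> float:
    """PUE = total facility power / IT equipment power."""
    return (it_kw + cooling_kw + other_kw) / it_kw


def cooling_cut(it_kw: float, cooling_kw: float, other_kw: float,
                reduction: float) -> tuple[float, float]:
    """Return (new PUE, kWh saved per year) when cooling power drops by
    the fraction `reduction` and all other loads stay constant."""
    new_cooling = cooling_kw * (1.0 - reduction)
    saved_kwh = (cooling_kw - new_cooling) * HOURS_PER_YEAR
    return pue(it_kw, new_cooling, other_kw), saved_kwh


if __name__ == "__main__":
    # Hypothetical facility: 1,000 kW IT, 400 kW cooling, 100 kW other overhead.
    it, cooling, other = 1000.0, 400.0, 100.0
    print(f"baseline PUE: {pue(it, cooling, other):.2f}")
    new_pue, saved = cooling_cut(it, cooling, other, 0.30)
    print(f"after 30% cooling cut: PUE {new_pue:.2f}, {saved:,.0f} kWh saved per year")
```

In this hypothetical case PUE falls from 1.50 to 1.38 and about 1.05 million kWh are saved per year; the same functions can be rerun with a site's own measured loads.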

Liquid cooling's energy-efficiency advantage also translates into lower operating costs and makes it cheaper to stay within energy-consumption quotas. On the power-supply side, it means a facility's power allocation is used more effectively, with more of it going to IT equipment rather than cooling.

Guidance on PUE (Power Usage Effectiveness, the ratio of a facility's total energy use to the energy consumed by its IT equipment) currently varies across Europe and the United States, depending on the standard, the organization, and the region. For instance:

The Green Grid: A nonprofit organization dedicated to advancing data center energy efficiency, The Green Grid commonly proposes a PUE requirement of below 1.5. This standard is widely recognized and applicable across many countries.

U.S. Department of Energy: The U.S. Department of Energy typically recommends a PUE between 1.2 and 1.5, with the specific range depending on the scale and nature of the data center.

European Code of Conduct for Data Centres: A Europe-wide initiative that encourages energy efficiency in data centers. The code recommends a PUE below 2.0 and also promotes additional metrics for a more complete assessment of energy efficiency.

ASHRAE: The American Society of Heating, Refrigerating and Air-Conditioning Engineers publishes several standards for data center energy efficiency, including ASHRAE 90.4-2019. According to this standard, efficient data centers should maintain a PUE of 1.3 or lower.
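The targets listed above can be encoded as simple upper limits and checked against a measured PUE. This sketch takes the limits exactly as summarized in this section (not from the standards documents themselves) and treats the DOE range as satisfied by anything at or below its upper end; the `PueTarget` type and the `meets` and `report` functions are hypothetical.

```python
from typing import NamedTuple


class PueTarget(NamedTuple):
    source: str
    limit: float
    inclusive: bool  # True for "X or lower", False for "below X"


# Upper limits as summarized in this section; verify against the source documents.
TARGETS = [
    PueTarget("The Green Grid", 1.5, False),
    PueTarget("U.S. DOE", 1.5, True),  # upper end of the 1.2-1.5 range
    PueTarget("EU Code of Conduct", 2.0, False),
    PueTarget("ASHRAE 90.4-2019", 1.3, True),
]


def meets(pue: float, target: PueTarget) -> bool:
    """Whether a measured PUE satisfies one target's upper limit."""
    return pue <= target.limit if target.inclusive else pue < target.limit


def report(pue: float) -> dict[str, bool]:
    """Map each target's source to whether the given PUE satisfies it."""
    return {t.source: meets(pue, t) for t in TARGETS}


if __name__ == "__main__":
    for value in (1.5, 1.25):
        print(value, report(value))
```

A PUE of 1.5, for example, fails the Green Grid and ASHRAE figures quoted here but passes the other two, while 1.25 passes all four.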

These targets show that liquid cooling is well suited to such scenarios, and they are also driving its rapid advancement.

Reasons Driving Liquid Cooling Demand: AI as the Demand Driver and Inflection Point

Since its release at the end of 2022, ChatGPT has become one of the most closely watched technological innovations, drawing widespread attention and discussion. Its emergence marks a major breakthrough in artificial intelligence. Many other representative models have followed, each vying to lead the era, and the industry's expectations are optimistic. Past industrial revolutions carried us from the steam engine to the internal combustion engine to electrification. What, then, will define Industry 4.0? People have long wondered what technology might represent it, or whether the new information revolution would produce an event of comparable significance. In my view, large models such as ChatGPT may be what truly ushers us into the age of intelligence and marks the start of the next industrial revolution.

There are many views on this within the industry. One is that it is crucial to keep up with the trends of the AI era. As NVIDIA puts it, we are at AI's “iPhone moment.” Li Kaifu has said that every application will be rebuilt with AI 2.0, meaning today's large models and AIGC (AI-generated content) models. In an application such as DingTalk, for example, AI is likely to underpin every business capability. AI can already generate speech, sing, write code, help create presentations, and more. Doing all of this well, however, takes substantial computing power. Robust computing power is pivotal to stability and efficiency, and data centers provide the infrastructure that delivers it.

The chart in the upper right corner shows NVIDIA's own case: only 48 GPU servers are needed to complete tasks that previously required many CPUs. Applying this technology, however, has created new problems. A single server now draws about 8 kilowatts, and in many Chinese data centers air cooling cannot meet such high-density cooling demands. Why not lower the density and put only one server in each rack? That is feasible, but it creates other problems, such as a sharp increase in network investment. You may not have noticed, but the AI boom has also ignited a previously little-known industry: optical modules and optical communications. Stocks in this sector may surge even more than those of model developers or NVIDIA. The reason is that spreading servers across more cabinets lengthens the links between them, and in many scenarios cabinets that were once connected with copper cables are now connected with optical fiber, greatly increasing demand for optical modules.
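The density trade-off described above can be illustrated with a rack count. The 48 servers and roughly 8 kW per server come from the text; the per-rack cooling budgets (10 kW for air, 40 kW for liquid) are hypothetical values chosen only to show the effect, and `racks_needed` is a hypothetical helper.

```python
import math


def racks_needed(servers: int, server_kw: float, rack_budget_kw: float) -> int:
    """Racks required when each rack can dissipate at most rack_budget_kw."""
    per_rack = math.floor(rack_budget_kw / server_kw)
    if per_rack == 0:
        raise ValueError("a single server exceeds the rack's cooling budget")
    return math.ceil(servers / per_rack)


if __name__ == "__main__":
    servers, server_kw = 48, 8.0  # figures from the text
    # Hypothetical per-rack cooling budgets, for illustration only.
    budgets = (("air-cooled, 10 kW/rack", 10.0), ("liquid-cooled, 40 kW/rack", 40.0))
    for label, budget in budgets:
        print(f"{label}: {racks_needed(servers, server_kw, budget)} racks")
```

With these assumptions, air cooling allows one server per rack and needs 48 racks, while the liquid-cooled budget fits five servers per rack and needs 10. Every extra rack adds inter-rack links, which is where the additional network cost comes from.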

In short, with the arrival of new-generation AI technologies like ChatGPT, the development of liquid cooling is well timed. Moreover, AI today is used mainly for research and for training large models, but it will gradually move into large-scale application.

In the past, AI training was typically done on machines each equipped with 8 high-performance GPUs, with liquid cooling used to deal with the resulting heat. Only a few players can afford such machines, however, and training a large model requires a considerable number of them, so the total number of clusters that can be built is limited.

If every application comes to use AI for inference, however, every server will become a GPU-equipped AI server. Before large models, inference might have needed only a small card such as the A10, and air cooling was sufficient. With today's large models, a server may need 8 A100 cards, which calls for liquid cooling.
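The jump from a single small inference card to an 8-GPU server can be quantified from nominal GPU power. The sketch assumes about 150 W for an A10 and about 400 W for an A100 in its SXM form factor, and counts GPU power only (CPUs, memory, and fans are ignored); the table and function names are hypothetical.

```python
# Nominal board power in watts (assumed values: A10 ~150 W, A100 SXM ~400 W).
GPU_TDP_W = {"A10": 150, "A100-SXM": 400}


def gpu_power_w(config: dict[str, int]) -> int:
    """Total nominal GPU power for a server described as {model: count}."""
    return sum(GPU_TDP_W[model] * count for model, count in config.items())


if __name__ == "__main__":
    before = {"A10": 1}      # small inference card, air-cooled
    after = {"A100-SXM": 8}  # large-model inference server
    print(gpu_power_w(before), gpu_power_w(after))
```

Under these assumptions GPU power per server rises from 150 W to 3,200 W, more than a twentyfold increase before any other components are counted.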

The timing is right. The liquid cooling industry already has a decade of development behind it: GRC, the first solution provider dedicated to data center liquid cooling, has been around for about ten years. Even so, the field is still in an era of many competing technical approaches.