How do Data Centers Support High-Performance Computing?

Data centers have been in existence since the 1940s, when the first dedicated computer rooms were built for military purposes. As computing and storage demands grew exponentially over the following decades, computing spread into nearly every domain of life, and organizations increasingly sought dedicated data centers to house their infrastructure.

To reduce costs and stay competitive, many organizations outsource their data center infrastructure, a practice that has become almost essential since the emergence of High-Performance Computing (HPC). HPC workloads demand high power density and high bandwidth, and they generate a great deal of heat. Data centers that host them must therefore handle the heat and power density of running many high-performance computers simultaneously.

Given that HPC enables faster integration of data analytics and artificial intelligence, it comes as no surprise that leading companies adopting HPC data centers are predominantly in the cloud computing and IT industries. However, companies from other industries can also harness the power of HPC.

These may include:

- Research Laboratories

- Financial Technology

- Weather Forecasting

- Media and Entertainment

- Healthcare

- Government and Defense

Data centers supporting HPC can meet the growing demand for fast networking while keeping up with the increasingly digitized landscape.

The Three Key Systems of HPC

To build an infrastructure that caters to HPC, it is crucial to understand the three key systems of an HPC cluster: computing, storage, and networking.

Computing

An efficient HPC system consists of computer servers networked into a cluster, together with software programs that run algorithms across those servers simultaneously. Each component must keep pace with the others in the cluster; otherwise, the performance of the entire HPC system suffers.
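Why one slow component drags down the whole cluster can be sketched with a simple model. The sketch below assumes a bulk-synchronous pattern, in which every node must reach a barrier before the next step starts, so each step takes as long as the slowest node. The per-node timings are hypothetical numbers chosen for illustration, not measurements.

```python
from statistics import mean


def synchronized_step_time(node_times_s: list[float]) -> float:
    """Assumed bulk-synchronous model: all nodes wait at a barrier,
    so the step ends only when the slowest node finishes."""
    return max(node_times_s)


def parallel_efficiency(node_times_s: list[float]) -> float:
    """Average busy time divided by step time: 1.0 means no node waits idle."""
    return mean(node_times_s) / synchronized_step_time(node_times_s)


# Hypothetical per-node compute times (seconds) for one step.
balanced = [1.0, 1.0, 1.0, 1.0]
one_straggler = [1.0, 1.0, 1.0, 2.0]

print(synchronized_step_time(balanced), parallel_efficiency(balanced))
print(synchronized_step_time(one_straggler), parallel_efficiency(one_straggler))
```

With one node running at half speed, the step time doubles and efficiency falls to 0.625, even though three of the four nodes are fully healthy.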

The goal of HPC is to perform high-speed computations, which requires aggregating computing capabilities from different hardware types. Data centers have the necessary space and capacity to accommodate the computing systems and hardware required to support HPC operations, which often involve power and cooling coordination beyond what most enterprises can handle.

Storage

To accommodate the massive amount of data processed by HPC, the storage system should offload data from the CPUs as frequently as possible without interrupting the computational operations. According to Weka, HPC storage systems need to meet the following requirements:

- Data from any node should be available at all times.

- Available data must be the most up-to-date.

- Capable of handling data requests of any size.

- Support performance-oriented protocols.

- Utilize the latest storage technologies (e.g., SSD).

- Scale while keeping latency consistently low, in the millisecond range.
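Weka's list does not say how a storage system meets the "any node" and "any size" requirements. One common technique in parallel file systems is striping: a file is cut into fixed-size stripes distributed round-robin across several storage servers, so a single large request is served by many servers at once. The sketch below is a generic, RAID-0-style illustration of that idea; `stripe_request`, `Chunk`, `stripe_size`, and `stripe_count` are hypothetical names and not the API of Weka or any other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    server: int         # index of the storage server holding this piece
    server_offset: int  # byte offset within that server's portion of the file
    length: int         # number of bytes in this piece


def stripe_request(offset: int, length: int,
                   stripe_size: int, stripe_count: int) -> list[Chunk]:
    """Split a byte-range request of any size into per-server pieces,
    assuming simple round-robin (RAID-0 style) striping."""
    if offset < 0 or length < 0 or stripe_size <= 0 or stripe_count <= 0:
        raise ValueError("invalid striping parameters")
    chunks = []
    pos, end = offset, offset + length
    while pos < end:
        stripe_index = pos // stripe_size   # global stripe number
        within = pos % stripe_size          # offset inside that stripe
        take = min(stripe_size - within, end - pos)
        server = stripe_index % stripe_count
        # Each server stores every stripe_count-th stripe back to back.
        server_offset = (stripe_index // stripe_count) * stripe_size + within
        chunks.append(Chunk(server, server_offset, take))
        pos += take
    return chunks


# Tiny numbers for readability: 4-byte stripes across 3 servers.
for chunk in stripe_request(offset=2, length=10, stripe_size=4, stripe_count=3):
    print(chunk)
```

Here a 10-byte read starting at byte 2 touches all three servers, which could then serve their pieces in parallel. Real systems use stripes of megabytes rather than bytes.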

Networking

The network topology of HPC differs significantly from that of internal office networks. Beyond the extreme demands of continuous data transfer between CPUs and storage, the many computational components that make up an HPC environment are combined in a “fabric” and treated as one computer. “The key concept of HPC fabric is having a large amount of scalable bandwidth (throughput) while maintaining ultra-low latency.”
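Why a fabric needs both high bandwidth and low latency can be seen from the simple latency-bandwidth (often called "alpha-beta") model, in which sending a message of n bytes takes T = latency + n / bandwidth. The sketch below assumes a hypothetical link with 1 µs latency and 200 Gb/s bandwidth. These are illustrative parameters, not specifications of any particular product.

```python
def transfer_time_us(message_bytes: int, latency_us: float,
                     bandwidth_gbps: float) -> float:
    """Latency-bandwidth model: T = latency + size / bandwidth (microseconds)."""
    bytes_per_us = bandwidth_gbps * 1e9 / 8 / 1e6  # Gb/s -> bytes per microsecond
    return latency_us + message_bytes / bytes_per_us


# Hypothetical link parameters, for illustration only.
LATENCY_US = 1.0
BANDWIDTH_GBPS = 200

for size in (8, 4_096, 1_000_000_000):
    t = transfer_time_us(size, LATENCY_US, BANDWIDTH_GBPS)
    print(f"{size:>13} bytes: {t:12.5f} us, latency share {LATENCY_US / t:.1%}")
```

Small messages, such as the synchronization traffic between nodes, are dominated by latency. Large data transfers are dominated by bandwidth, so an HPC fabric has to do well on both.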

Cooling Facilities

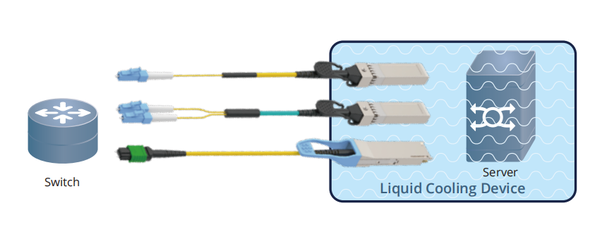

Given the density and heat generated by HPC infrastructure, cooling can be a significant challenge. Conventional hot-aisle containment systems used in modern data centers can effectively cool today’s 50 kW HPC racks. Looking ahead, HPC clusters may increase in density and drive wider adoption of liquid cooling, which offers cooling capacities up to 1,000 times greater than air cooling with a smaller physical footprint. Immersion cooling deployments offer greater flexibility and can accommodate the higher densities that future customers are likely to need.
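A back-of-the-envelope heat balance shows why liquids move heat far more compactly than air. The heat a coolant carries away is Q = ṁ·c_p·ΔT, so the volumetric flow needed is Q / (ρ·c_p·ΔT). The sketch assumes a 50 kW rack, a 10 K coolant temperature rise, and textbook room-temperature properties for air and water. Water is only a stand-in here, since the dielectric fluids used in immersion cooling have different properties. The resulting ratio compares volumetric heat capacity and is not the same metric as the "1,000 times" figure above.

```python
# Approximate room-temperature properties (assumed textbook values).
AIR = {"density_kg_m3": 1.2, "cp_j_kgk": 1005.0}
WATER = {"density_kg_m3": 998.0, "cp_j_kgk": 4186.0}


def volumetric_flow_m3_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Flow needed to remove heat_w watts with a delta_t_k temperature rise,
    from Q = rho * V_dot * c_p * delta_T."""
    return heat_w / (fluid["density_kg_m3"] * fluid["cp_j_kgk"] * delta_t_k)


RACK_HEAT_W = 50_000.0  # assumed 50 kW rack, matching the figure in the text
DELTA_T_K = 10.0        # assumed coolant temperature rise

air = volumetric_flow_m3_s(RACK_HEAT_W, DELTA_T_K, AIR)
water = volumetric_flow_m3_s(RACK_HEAT_W, DELTA_T_K, WATER)
print(f"air:   {air:.2f} m^3/s")            # about 4.15 m^3/s
print(f"water: {water * 1000:.2f} L/s")     # about 1.20 L/s
print(f"air needs ~{air / water:.0f}x the volume flow of water")
```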

Take GIGALIGHT’s innovative liquid-cooled optical interconnect modules and solutions as an example. These liquid-cooled high-speed modules can operate stably in fluorocarbon liquids and mineral oil at depths of up to 1 meter (certified through long-term customer validation). Compared with traditional cooling solutions, they provide higher heat-dissipation efficiency and lower energy consumption, taking the computational power of high-performance computing to a new level.

High-Performance Components

High-performance computing data centers are built with high-quality systems, components, and facilities to meet the demands of HPC. These data centers provide the affordable power, networking, scalability, redundancy, and security that HPC requires. Within them, HPC clusters also need high-performance parallel interconnect components to connect their devices.

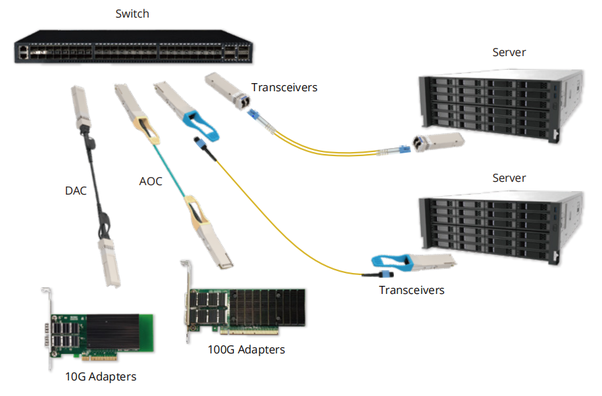

GIGALIGHT has been a pioneer in parallel optical interconnects for computing since 2013. We are dedicated to developing high-performance parallel optical modules and interconnect cables. Our product series covers speeds of 10G, 25G, 40G, 100G, 200G, 400G, and 800G, and supports the InfiniBand protocol.

Here are some key components that support parallel interconnects in HPC data centers:

– Server optical network cards based on Intel and NVIDIA chip designs, supporting parallel interconnects from 10G to 200G and extending towards 400G/800G.

– High-speed parallel optical modules designed based on VCSEL lasers, DML lasers, or silicon photonics platforms, such as 100G QSFP28 SR4/PSM4, 200G QSFP56 SR4/DR4, 200G QSFP-DD SR8/PSM8, and 400G QSFP-DD SR8/DR4.

– Low-power, short-reach parallel DAC (Direct Attach Copper) and AOC (Active Optical Cable) interconnect cables, such as 400G QSFP-DD DAC/AOC and 800G QSFP-DD DAC/AOC.

– Electrical loopback modules that support self-loopback testing of system devices.

– Innovative liquid-cooled interconnect optical modules and solutions.
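The "parallel" in these module names refers to splitting the aggregate rate across several optical lanes. The trailing digit of interface codes such as SR4, DR4, PSM4, and SR8 gives the lane count. The sketch below derives the per-lane rate for the modules listed above. It assumes names follow the exact "speed form-factor interface" pattern used in this list. The parser is a simplification for illustration and does not handle every industry naming scheme.

```python
import re


def lanes_from_suffix(interface: str) -> int:
    """Assumed convention: the trailing digit of SR/DR/PSM codes is the lane count."""
    match = re.fullmatch(r"(?:SR|DR|PSM)(\d)", interface)
    if match is None:
        raise ValueError(f"unrecognized interface code: {interface}")
    return int(match.group(1))


def per_lane_gbps(module: str) -> float:
    """Split e.g. '400G QSFP-DD SR8' into speed, form factor, and interface."""
    speed, _form_factor, interface = module.split()
    total_gbps = int(speed.rstrip("G"))
    return total_gbps / lanes_from_suffix(interface)


modules = [
    "100G QSFP28 SR4", "100G QSFP28 PSM4",
    "200G QSFP56 SR4", "200G QSFP56 DR4",
    "200G QSFP-DD SR8", "200G QSFP-DD PSM8",
    "400G QSFP-DD SR8", "400G QSFP-DD DR4",
]
for m in modules:
    print(f"{m:<18} {lanes_from_suffix(m.split()[-1])} lanes x {per_lane_gbps(m):g}G")
```

The same 400G rate can come from eight 50G lanes (SR8) or four 100G lanes (DR4). This tradeoff between lane count and per-lane speed shapes the fiber and cabling choices in an HPC fabric.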

These high-quality components, along with the overall system and facilities, work together to create high-performance computing data centers that meet the requirements of HPC.